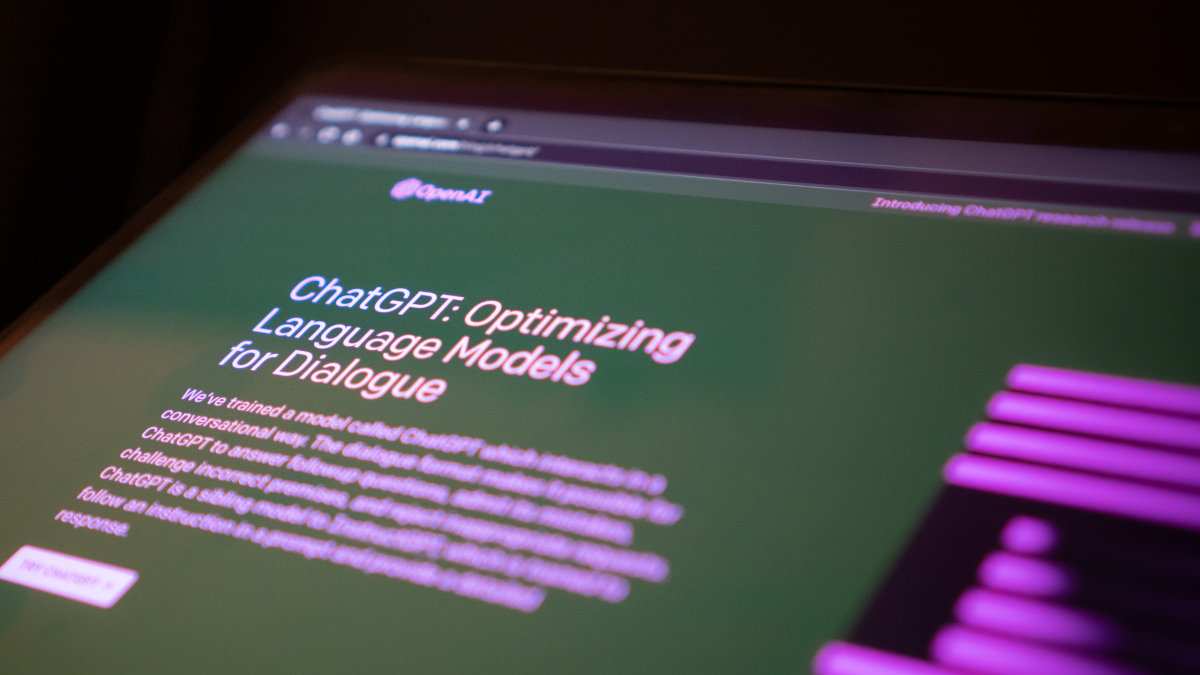

I remember ChatGPT’s launch as a hallmark of the end of simpler times. A time when my LinkedIn was filled with reposted stories of people’s own imagined benevolence. Before my For You page was all AI-generated SEO seeding strategy. When pub beer gardens weren’t filled with murmured notions of being teched out of a job.

The early days were full of whimsy: ‘write me an iambic pentameter poem about a horse’. But now that it can automate tasks and surface useful information, we’re firmly in the hype phase of AI chat. And with Bing and Google developing their own versions, it’s set to take a central place in how we find information day-to-day.

This isn’t an ode to AI. In fact, there are plenty of examples of huge biases in its training data seeping through its safeguards, or of it just being plain creepy. But that’s a conversation for another time. Because this month’s theme is ‘hope’. And I’m feeling very hopeful about what conversational AI can teach us about working better with humans.

Clearer briefs, better outcomes — but this isn’t limited to machines

As ChatGPT took off, people began to push past the fanciful and look to it to solve bigger problems. In search of more nuanced answers, they needed to push the AI beyond the generic and into more imaginative territory: specifying the output they wanted, clarifying the role it should play, setting the tone of the response. Because the AI works by predicting the most likely next word, left to its own devices it tends to return the most obvious answers. So a more investigative approach yielded much better responses.

High-quality inputs lead to high-quality outputs. This seems incredibly obvious when it comes to computational systems. But it’s regularly ignored when it comes to humans, and it’s never more important than when writing briefs. How many times have we been handed a half-baked research project or a long, sprawling creative brief? So often the briefs we give and the questions we ask are flabby, cluttered, confused and dry. We wouldn’t inflict that on ChatGPT, so why are we inflicting it on ourselves?

AI better way to brief

Be single-minded, get to the crux and ditch the clutter

The best briefs can be condensed down to a single question. ‘How can we launch a small car in big car country?’ Mmm: chunky cultural insight, simple, action-led. And this is what people are learning about AI: brevity wins. They’re learning that the right questions are directional, specific and bring human curiosity to the fore.

Put people in the mind of the audience

‘Answer as if you were an X’ has become a core way people guide AI. It steers the model to respond as a certain person might, bringing their tone, voice and experience into the conversation. Audience perspective is just as core to a strong brief. If we can give people a window into the soul of the audience, we’ll guide authentic work that genuinely connects.

Approach the process with curiosity

With conversational AI, if the first answer doesn’t hit the mark, people ask a follow-up. With humans we tend to be more reserved, fearing we may seem ill-informed if we ask too many questions. Yet questioning spurs learning, fuels innovation and improves performance. It brings us closer to one another, too. Keep asking ‘why’ until you hit on something chunky.

It’s not over ‘til AI can crochet Mickey Mouse

We’re not machines; we’re humans. Which means (at least for now) we benefit from powers of lateral thinking and creativity that are inaccessible to AI. We can pull separate threads together to create something totally new, making connections between seemingly unrelated, far-removed concepts. Our unique experiences give us a cultural lens through which to craft different responses. We’re not wired up to the mainframe, but our narrower view has power too.

We can afford to be kinder to ourselves and trust in the power of our minds to produce uniquely human output. The AI isn’t winning creative prizes… yet.

Featured image: Jonathan Kemper / Unsplash