Like the mythical phoenix rising from the ashes, online misinformation seems to re-emerge with each new attempt to extinguish it. Fake news is increasingly hijacking global narratives, from national politics to the Israel-Hamas conflict to Covid-19. It threatens the integrity of responsible journalism, the safety of consumers seeking factual information, and the reputation of brands whose ads appear alongside these false sources.

Background to the rise in misinformation

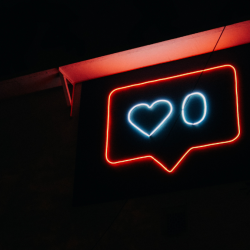

Two factors have laid the groundwork for the rise in misinformation. First, consumers are increasingly drawn to user-generated content on social media platforms and are losing trust in official institutions. Second, generative AI tools are now widely available and can create dynamic fake content in seconds. As evolving forms of misinformation make it harder for users to tell fact from fiction, brands are also paying a price when they fail to identify and avoid it in their media plans.

Findings from MAGNA and Zefr indicate that 61% of consumers recall brands whose ads are associated with misinformation, and that almost half of them perceive those brands' integrity as compromised as a result.

In addition, the IAB Europe Guide to Brand Safety and Suitability states that nearly 90% of consumers believe brands are responsible for ensuring their ads are placed in suitable and safe environments. This matters all the more because brands can keep distributors of misinformation in business by funding them through their ad spend.

So how can brands navigate this ever-changing online landscape, and accurately identify and stifle complex sources of misinformation (or, at the very least, avoid funding them) to protect consumers, their own reputation, and society at large?

What’s driving this evolution of misinformation?

Since 2017, online misinformation has evolved from plain text into complex, dynamic, and increasingly convincing formats, including images and video. AI-powered deepfake technology makes it possible to manipulate video so that fabricated events or actions look real, making it ever harder to discern fact from fiction. As a result, deepfakes have been listed by the UK government as the most serious AI crime threat.

Voice replication technology compounds the problem: models such as Microsoft's VALL-E can mimic a voice from just a few seconds of audio input. Combined, these technologies allow for the creation of sophisticated counterfeit audiovisual content.

Misinformation is also spreading through more sophisticated dissemination techniques, including bots, fake accounts, and coordinated campaigns that push false information rapidly across multiple online platforms. These techniques are particularly hard to detect when combined with subtle distortions, a mix of truths and falsehoods, or distribution through closed or encrypted channels that evade monitoring. Online echo chambers make matters worse: by reinforcing pre-existing beliefs and cognitive biases and isolating individuals from diverse perspectives, they deepen polarisation and create fertile ground for misinformation to spread.

Innovative tools for new fact-checking challenges

Online misinformation has wide-ranging negative consequences for both consumers and brands. Consumers who encounter fake content can be misled into buying products based on falsified information, or into abandoning brands because of falsehoods spread about them.

All of this contributes to a loss of trust in reputable organisations. Brands that become entangled with fake content, meanwhile, can lose existing and prospective customers and suffer damage to their brand image and stock value.

Thankfully, while the technology behind fake content and misinformation continues to advance, so do the tools to tackle it. Studies have shown that fact-checking can significantly improve people's factual understanding and durably reduce belief in misinformation. Online misinformation warning labels have also proven effective, even among people who distrust them.

Brands that partner with fact-checking organisations to provide real-time verification badges on their marketing materials can build more transparent relationships with their audiences. They could also instil further trust by providing dedicated FAQ sections, backed up by fact checks, to debunk misconceptions about their content.

There have been major developments in cutting-edge fact-checking technologies, including discriminative AI solutions that can understand the nuances, intent, and context of complex forms of content. Google's Fact Check Explorer is one example of an AI-powered toolkit that gives marketers easy access to established fact checks published by reputable organisations, simplifying their moderation process.

For more complex video content, AI-driven solutions such as those offered by Zefr and IPG Mediabrands provide multi-modal misinformation detection, using robust pre-campaign categorisation and custom algorithms to help marketers navigate user-generated content safely. Because AI detection capabilities are still maturing, these solutions identify the nuanced aspects of online content more reliably when they are trained by skilled human moderators who work closely with prominent fact-checking organisations.

Another effective way for brands to safeguard their ads from evolving forms of online misinformation is to adhere to industry-wide standards that bring advertisers and publishers together under the latest content safety guidelines. A prominent example is the framework established by the Global Alliance for Responsible Media (GARM), which helps marketers recognise and defund sources of online misinformation during media planning. GARM also provides a thoroughly audited member verification list, allowing marketers to direct advertising spend exclusively towards reputable publishers that uphold the same brand safety and suitability standards as they do.

User-driven solutions such as customisable reporting buttons can also empower consumers to flag inappropriate or false content, enabling platforms to address brand safety concerns swiftly. Marketers can then integrate this data into their moderation systems to help avoid unsuitable content and gain greater control over their brand reputation.

While social platforms are implementing measures to combat misinformation, brands remain responsible for ensuring they are not unintentionally financing distributors of misinformation and facilitating its spread. Combining robust discriminative AI technology with human supervision is the most secure way to identify and stifle fast-evolving misinformation at scale across online platforms. This approach can help social platforms provide accurate fact-checking labels and help brands build fact-checking and suitability standards into campaign planning and execution. Ultimately, it ensures consumers receive safe and accurate content, restoring trust and encouraging responsible media consumption online.

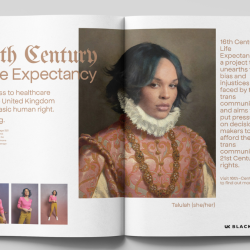

Featured image: Peter Lawrence / Unsplash