Last month, one of the largest news organisations in the USA reached out about my tweets. They had seen a few in which I speculated that the combination of record political advertising spend, affective polarization, modern media, and AI might bubble up into a very weird cultural environment. It's been nearly a decade since 2016, and the organisation shared those concerns.

In what remains of my naïveté, I said, 'Well, you've had a cadre of media theorists, sociologists, psychologists, linguists, and PR experts working on this since 2016'. This was not the case.

What problem, exactly? A well-known one, seen throughout history in different forms: whom to trust? Trust is both an exploit for mass manipulation and a form of defence against it.

Modern marketing and public relations evolved out of propaganda

As we discovered in 2016, politicians have worked out that they can lie without consequences. Lying is the least sophisticated form of propaganda, and its current prevalence is largely down to the former guy over the pond. Historically, social bonds and boundaries constrained the behaviour of lawmakers.

Politicians now feel free to say whatever they want. The media and the opposition fact-check them, but that doesn't seem to help. If anything, it seems to make things worse by increasing the distribution and salience of the lie.

When I was at McCann, we were proud of our slogan 'Truth Well Told'. Internally (and across the industry), we obviously joked about 'Lies Well Sold', because it rhymes and taps into an anxiety that many in the industry feel. We should take comfort in knowing it isn't true: advertising doesn't lie, because it is prohibited from doing so.

Sure, puffery is allowed, so you can make outlandish, metaphorical claims. But if you make a statement of fact in an advertisement and someone challenges it, the claim can be reviewed by the Advertising Standards Authority (ASA) in the UK. You can't get away with saying things that are untrue.

The ASA does great work for our industry by self-regulating the promise we make to the nation as an industry: we don't sell snake oil. That means people can trust the advertising they see.

Political advertising is not regulated, as we found out en masse in 2016

Politicians can say anything. Until recently, it would seem, they were constrained only by social convention. This year will see more money spent on political advertising than ever before, as several of the world's largest democracies go to the polls and the current context is fraught with tension. Yes, deepfakes of candidates saying whatever terrible thing will best trigger the targeted group will keep appearing, predominantly at a local level. But we're also seeing the weirdness manifest in other ways: in the UK, an AI is standing for Parliament. 'If it wins, "AI Steve" will be represented by businessman Steve Endacott in Parliament.' (Honestly, I have no idea.)

At the media level, a lot of invisible manipulation will be attempted. Micro-targeting of exactly this kind was the substance of the Cambridge Analytica scandal. In both the USA and the UK, there are few rules governing how politicians use media or AI. The fear is that, whatever happens in the elections, many on either side won't believe the outcome because they have decided to trust the wrong sources, and that might have… consequences.

That said, state intervention raises complex questions. As Ciaran Martin, former chief executive of the National Cyber Security Centre, recently wrote: 'If false information is so great a problem that it requires government action, that requires, in effect, creating an arbiter of truth. To which arm of the state would we wish to assign this task?'

This allusion to Orwell’s Ministry of Truth points out the problem: truth and trust are different

Humans know very few things about the world directly. You know the Earth is an oblate spheroid in a heliocentric system held together by gravity. But you rely almost entirely on secondhand information for this 'knowledge', which means you have implicitly decided whom to trust: teachers, academics, empiricism, peer review, certain media.

Other people believe the world was created around 6,000 years ago, or that it is flat, because they trust different voices. Trust is the most useful vector for distortion and manipulation, which is why politically motivated think tanks exist. As Hannah Arendt famously wrote: 'The ideal subject of totalitarian rule is not the convinced Nazi or the convinced Communist, but people for whom the distinction between fact and fiction, true and false, no longer exists.' A world where you can't trust anyone.

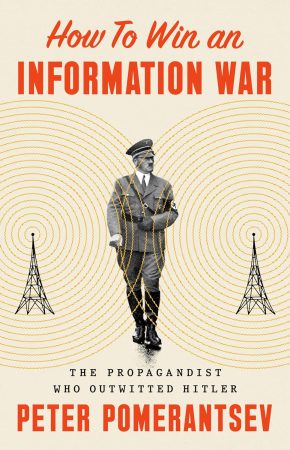

In his new book, How to Win an Information War, Peter Pomerantsev explains that during WWII the German media had been co-opted by the state. Wary of foreign influence (cough, TikTok), the Nazis made listening to the BBC punishable by hanging. In 1941, Germans started tuning into an apparently German station called Gustav Siegfried Eins. It used the sonic branding of Nazi news broadcasts but featured a seditious voice who swore incessantly and used racial slurs. He loved the army but hated the Nazis, because they were corrupt and lying to the people. Long, interesting story short: it was created by the British Political Warfare Executive, voiced by a German novelist of Jewish descent, and recorded in a stately home in Bedfordshire. It was part of an effort that corralled 'artists, academics, spies, soldiers, astrologists and forgers' (astrologists?), including Bond creator Ian Fleming, into using creativity for the war effort.

According to one participant, this was because the Allies didn't want anyone to think the 'war had been won with a trick'. Reductive hyperbole aside, this trick, like advertising, used creativity and a trusted voice to try to change the world.

[Is it too much of a stretch to suggest brands begin to accrue value only when they are trusted?]

More information is less important than trusted voices

We know emotions are more persuasive than facts in advertising. The same holds true in propaganda. Facts don’t change minds…

… but creativity can.

Conspiracy theories and propaganda feed on the lonely and dispossessed, and, according to 'all the research', we have less trust and more loneliness than ever before. Perhaps initiatives like Reform Political Advertising can help. Its guidelines suggest that 'electoral advertising should be regulated so that fact-based claims are accurate. We're not interested in party policy or political arguments, but we are very interested indeed when data, events and identities are manipulated, ignored or invented.'

The structural problems of widening inequality, a sclerotic political system, and the overwhelming sense of helplessness felt by some people are the seedbeds in which manipulative misinformation flourishes.

Those are big challenges. Perhaps the ad industry can help in some small way by extending our self-regulatory regime to political advertising, though not in time for this year. Judging by the anecdote at the top, I suspect no one is prepared for how weird it is going to be.

I suggest that we use our professional skills to decode the political speech we encounter this year. I believe creativity will be more useful than fact-checking in fighting an information war. Whatever happens, the outcome will teach us something about the world: democracy only works when we trust the process.

Featured image: Library of Congress / Unsplash